Many people in the cyber security/defense/IT community are fascinated by the "sexy" work of high-end vulnerability researchers. In modern culture, the word "hacker" is often conflated with someone who can break into any hardened system. The people who find so-called 0-day vulnerabilities (vulnerabilities in software that the vendor doesn't yet know about or have a fix for) and turn them into exploits are often seen as the top of the hacker pyramid because of the incredibly challenging technical obstacles they must overcome, the deep and arcane knowledge of system semantics and architectures the work demands, and the obvious intelligence of many practitioners in this domain.

Google's Project Zero (P0) team is probably the preeminent public global team researching and publishing novel attacks against hardened systems such as Windows, Chrome, iOS and other software critical to the secure usage and survival of the Internet. They are impacting the gray market for vulnerabilities. Other teams conduct this research as a PR function for their product or services firms. Many high-end teams work inside secretive government (or government-funded) laboratories or agencies to support law enforcement or national security objectives. And a small number support themselves or a larger criminal syndicate through the development and use of these capabilities. When I did a Google search for vulnerability research, I also found Brené Brown, which made me chuckle. (Different kind of vulnerability research!)

Blackhat and many conferences were built around a platform to share the latest and most interesting "hacks" that these researchers have developed. News stories and books are built around the challenging accomplishments of the individuals and research teams. Vulnerabilities come with their own logos and web sites now.

Some members of the community watch admiringly and wish they could do the same. Some enjoy reading and learning about it and admire the technical accomplishments. Others leverage the research to raise awareness around theoretical or very real threats to their company/products. Still others use it to spread FUD (fear, uncertainty, and doubt) to sell more product or further a political agenda. Many companies benefit from the free research and QA that third parties perform on their products, using these discoveries to secure those products without paying for the work. (To their credit, many are seeking ways to better engage these third parties and compensate them for those valuable contributions.)

|

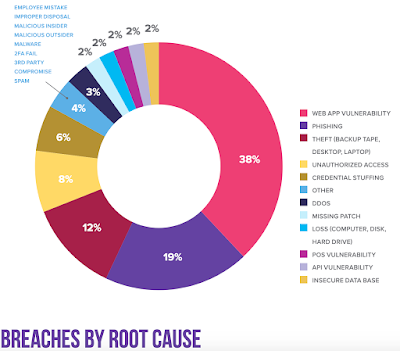

| Graphic from F5 Decade of breaches lessons learned report. |

While I haven't heard the counterargument made publicly (that one should focus exclusively, or at least massively increase attention, on 0-day vulnerability research), there are certainly individuals and organizations who make this their exclusive focus and have no interest in addressing the human/configuration side of the problem for various reasons. And I have seen individuals in those groups denigrate the work of those working on social engineering attacks, auditing systems for compliance and/or rolling out patches.

The problem is that, as in most complex domains, neither the problem nor the answer is boolean. It's complicated and requires a nuanced perspective, which is often missing in online rants. In this post, I'll address some of these complexities and explain why we need to address the human/configuration side of the problem while not neglecting the "high end" technical security risks that remain.

Attackers target the human or misconfigured/unpatched systems for numerous reasons:

- It has a low barrier to entry, meaning a large portion of the attacker community has access to these techniques (i.e., script kiddies, criminal or nation-state teams that are just starting out, etc.)

- It does not burn valuable capabilities if the operation is later discovered. Why spend your 0-day if you don't have to!?

- It is often more reliable. (In the modern era many 0-days rely on probabilistic techniques like heap spraying which fail a portion of the time depending on the usage/configuration of memory in the target.)

Decades ago, 0-day research was commonly performed by individual hackers who found vulnerabilities and didn't share them, but used them to poke around and "explore" the Internet. Reporting a discovered vulnerability to a vendor could result in the police being called or in lawsuits, and many hackers were young and didn't think they were "causing any harm" or doing anything wrong by using what they'd found for their own entertainment.

But today many firms have vulnerability reporting programs and policies of working with third-party researchers. Most of the top software companies in the world even offer some sort of compensation (cash, prizes, or recognition) to these researchers through internal or external bug bounty programs. (A great list is here.) The combination of maturing software development practices, productive pathways for reporting vulnerabilities discovered by third parties, and the exploit mitigation techniques available in modern operating systems and hardware means that finding useful 0-days and exploiting them typically requires significant effort by an advanced individual or team.

Attacks are conducted using BOTH approaches on a daily basis around the world. While the reports and news stories that get attention focus on breaches that used one or more 0-day attacks, the vast majority of breaches exploit human or system mistakes. 0-day attacks tend to be used in the highest-value or most narrowly targeted cases, mostly by nation states conducting intelligence operations and, less frequently, in law enforcement or "defense" operations. A non-negligible portion of 0-days are deployed by criminal groups (although in an era when North Korea employs large teams of hackers to raise billions to bypass national sanctions and fund weapons/missile research, or Russia explicitly refuses to shut down criminal operations out of the Russian Business Network as long as they target other countries, drawing the line between criminal group and nation state operations becomes increasingly difficult!).

Attackers will use the path of least resistance to accomplish their objective. In a perfect world, humans could not be manipulated into sharing passwords or other sensitive data. And software would be free of bugs and vulnerabilities. Systems and networks would always be properly configured. But that world is far away and, I would argue, theoretically unachievable. (Although I have yet to work out the methodology for a proof, I'm working on it!)

As a result, we are faced with a world of vulnerable software, systems/networks and humans, and with attackers who spend the minimum resources needed to accomplish their objectives. In that environment, defenders should focus their efforts on increasing the cost to an attacker in ways consistent with their own threat model. If you're an individual or a small/medium sized business (SMB) not in a high-risk class, you don't need to worry about targeted 0-day attacks and should focus more on phishing-style threats, reducing your attack surface and patching. If you're an elite government agency or global Internet powerhouse, you should invest in the full panoply of security measures including internal/external red teaming, vulnerability research programs, human testing, secure coding programs, multi-tiered security layers, robust security operations centers with visibility into each layer, deception measures in the network, customized locked-down software stacks, investments into new architectures and mitigations, etc.

Individuals and specialized research shops will continue to exist and advance the objectives of these groups. If someone is a vulnerability researcher (VR), they aren't going to suddenly start offering phishing training to individuals, even if that were the highest-payoff security measure for the organization that employs them, because the role wouldn't be interesting to them and would squander their abilities. They'll just change employers, or take a mundane position and do VR as an evening hobby. Similarly, we shouldn't force phishing training experts to become VR experts just because there is a need, if staring at hexadecimal and decoding heap structures isn't something that fascinates them or that they have an aptitude for.

To state it more succinctly, attackers will continue to exploit BOTH classes of vulnerability (software vulnerabilities and human weaknesses/system configuration) as required for their objectives, and improving the security of BOTH while properly understanding our risk is critical. Doing that in a quantitatively robust way is currently impossible since we're still grappling with how to quantify both classes of risk, but heuristics and other measures are appearing so we can at least approximate it. (Example papers on quantifying phishing, vulnerabilities) Researchers continue to publish papers that try to quantify and model these actors as game theoretic problems, using things like attack graphs, with limited practical success. (Random example)
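To make the "path of least resistance" idea concrete, here is a toy attack graph in Python. It is only a sketch: the node names, the edges and every cost figure are hypothetical values I made up for illustration, and real attacker costs are exactly the thing we don't yet know how to measure well. Dijkstra's algorithm stands in for an attacker who always picks the cheapest route.

```python
import heapq

# Hypothetical attack graph: nodes are attacker states, edge weights are
# made-up relative costs (effort, money, risk of burning a capability).
ATTACK_GRAPH = {
    "outside": {"phished_user": 2, "unpatched_server": 3, "zero_day_foothold": 50},
    "phished_user": {"internal_network": 1},
    "unpatched_server": {"internal_network": 2},
    "zero_day_foothold": {"internal_network": 1},
    "internal_network": {"crown_jewels": 4},
    "crown_jewels": {},
}


def cheapest_path(graph, start, goal):
    """Return (total_cost, path) for the lowest-cost route, via Dijkstra."""
    queue = [(0, start, [start])]
    best = {}  # lowest cost at which each node has already been expanded
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if node in best and best[node] <= cost:
            continue  # already reached this node at least as cheaply
        best[node] = cost
        for neighbor, weight in graph[node].items():
            heapq.heappush(queue, (cost + weight, neighbor, path + [neighbor]))
    return float("inf"), []


def with_hardened_edge(graph, src, dst, new_cost):
    """Copy the graph with one edge made more expensive for the attacker."""
    hardened = {node: dict(edges) for node, edges in graph.items()}
    hardened[src][dst] = new_cost
    return hardened


baseline = cheapest_path(ATTACK_GRAPH, "outside", "crown_jewels")

# The defender invests only in anti-phishing controls (hypothetical new cost).
after_phishing_fix = cheapest_path(
    with_hardened_edge(ATTACK_GRAPH, "outside", "phished_user", 20),
    "outside",
    "crown_jewels",
)

print(baseline)
print(after_phishing_fix)
```

With these made-up numbers the attacker starts with phishing, and once phishing gets expensive simply moves to the unpatched server. The 0-day route stays unused in both cases because it never becomes the cheapest option, which is the argument for raising the cost of BOTH classes of attack in line with your threat model.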

The larger question about the allocation of resources (people, money, etc.) needs to be addressed at the policy level. As long as companies can knowingly sell software that has known vulnerabilities in it and is insecure in its default configuration, we will have massive security breaches. As long as enterprises build/buy solutions that depend on everyone in their organization never making a bad security decision, and on each person correctly spotting every fake web site or deceptive phone caller, we will have humans being exploited. As long as we have millions of job openings for security professionals, we will remain understaffed and dependent on untrained operators and insecure code.

To see security postures change significantly requires measures across the entire spectrum. Changing the hardware and underlying software our platforms run on. Writing more secure code. Shipping systems securely by default. Automating testing and management. Training more users and security professionals. Buying security products that don't suck and work together to provide a complete picture. Embracing creative defensive approaches like dynamic defense (and "defending forward", whatever that means?) Quantifying everything and making rational decisions. To date we keep spending more money each year but still haven't seen a reduction in breaches... and we aren't going to by denigrating people in the community plugging different holes in the dike than we are.
